Monetisation of your machine data

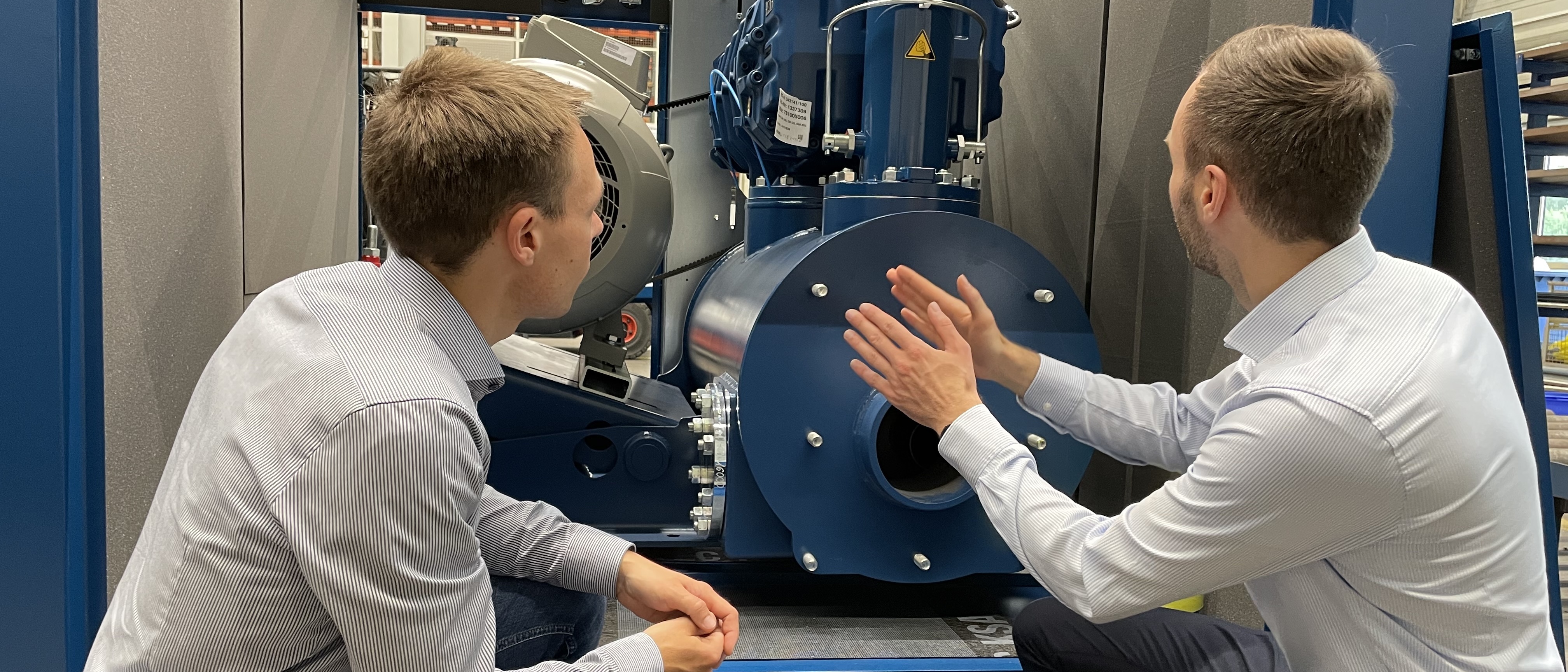

Insight into our way of working

In practice, implementing a data-based business model for your machines does not always require complex algorithms or artificial intelligence. In the spirit of the saying often attributed to Albert Einstein, we prefer solutions that are as simple as possible, but not simpler. Below, we give you a brief insight into our way of working.

Easy integration into field applications

To let you make use of our algorithms and models, we rely on common methods for software deployment. We package our applications as Docker containers, which allows us to deploy them at your site, either locally or as a cloud service.

A high level of data security and trustworthiness is essential, especially for cloud solutions. With Microsoft Azure, we use a well-established platform to meet these requirements. The Azure cloud also supports a lean development and maintenance process for both the source code and the application. Edge applications run directly at the machine, whereas applications with heavy performance or storage requirements are executed in the cloud. Microsoft Azure also provides options for linking both worlds.
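The split between edge and cloud described above can be illustrated with a small Python sketch. It is only an illustration under stated assumptions: the `Workload` type, the `choose_target` function, the capacity limits and the example workload names are all hypothetical and do not describe our actual deployment logic or any Azure API.

```python
from dataclasses import dataclass

# Hypothetical capacity limits of an edge device at the machine.
# Real limits depend on the hardware in use.
EDGE_MAX_CPU_CORES = 2.0
EDGE_MAX_STORAGE_GB = 16.0


@dataclass(frozen=True)
class Workload:
    """Resource needs of one containerised application (illustrative only)."""
    name: str
    cpu_cores: float
    storage_gb: float


def choose_target(workload: Workload) -> str:
    """Return "edge" if the workload fits the edge limits, otherwise "cloud"."""
    fits_on_edge = (
        workload.cpu_cores <= EDGE_MAX_CPU_CORES
        and workload.storage_gb <= EDGE_MAX_STORAGE_GB
    )
    return "edge" if fits_on_edge else "cloud"


# Two made-up workloads: a light check at the machine and a heavy training job.
workloads = [
    Workload("vibration-threshold-check", cpu_cores=0.5, storage_gb=1.0),
    Workload("fleet-wide-model-training", cpu_cores=16.0, storage_gb=500.0),
]

placement = {w.name: choose_target(w) for w in workloads}
```

In this sketch the light check stays at the machine, while the training job, which exceeds both assumed limits, is placed in the cloud. A real placement decision would typically also weigh latency, connectivity and data protection requirements.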